A simulation approach for autonomous heavy equipment safety

Are you working for a heavy equipment manufacturer or supplier? Or, like me, do you have a job related to the heavy equipment industry?

If so, you might be as excited as I am by the release of Monarch’s new jewel: the world’s first fully autonomous electric tractor. The Monarch tractor combines electrification, automation, machine learning, and data analysis to enhance farmers’ operations and increase labor productivity and safety. As Praveen Penmetsa, Monarch Tractor co-founder and CEO, summarized: “Monarch Tractor is ushering in the digital transformation of farming with unprecedented intelligence, technology, and safety features.” In this context, a robust autonomous heavy equipment simulation platform is mandatory.

The objective of this article is to give insights into how a simulation-driven approach can support the development of autonomous operation systems for heavy equipment, from sensor design to control system verification and validation.

The rise of autonomous operations in the heavy equipment industry

Heavy equipment customers want above all to increase machine efficiency in the field, producing more with less while keeping a strict eye on operator safety. But as machines become more specialized and more complex, guaranteeing the safety of people and the integrity of the machine also depends heavily on the operator’s skills.

Partly or fully automated machine operation is a solution that most industry stakeholders are already investigating to increase safety and improve operability, which puts them in a position to lead the digital revolution we face today.

With an increasing number of Advanced Driver Assistance Systems (ADAS) features to improve operator safety, such as remote-control driving/actuation or fully autonomous driving, the interactions between operator and vehicle are being reshaped. Nevertheless, working on autonomous heavy equipment operations means partially or entirely replacing a skilled operator. These operators bring four main elements to their position: their senses, their brain, their experience, and the environment they interact with.

Therefore, in this article, I will deliver insights on the following key pillars for building a robust, comprehensive digital framework to support autonomous heavy equipment development:

- The role of simulation and the digital twin

- Natural environment simulation

- Physical sensor modeling

- Heavy equipment vehicle modeling

- Automate and accelerate the verification workflow

The role of simulation and the digital twin

Model-based systems engineering (MBSE) is an approach that fits perfectly with the development of autonomous vehicles – many companies have been using it for years. Today, we’ve entered a period of intense innovation, and manufacturers are under tremendous pressure to reduce program costs. Greater system autonomy delivers new mission capabilities, but it also multiplies the interactions between the thousands of systems, interfaces, and components on a single heavy machine.

When it comes to product design and performance engineering, the MBSE approach brings the so-called ‘Digital Twin’ of the product/vehicle and its environment. It allows engineers to explore autonomous vehicle designs virtually and to accelerate the verification and validation of advanced controls. Pursuing these with a classical physical testing approach is hard on vehicle durability and expensive, even with a state-of-the-art data acquisition solution dedicated to ADAS.

Autonomous heavy equipment natural environment simulation

When it comes to natural environment modeling, the simulation solution should be open and flexible enough to allow the import of any type of:

- off-road vehicle geometric models,

- terrain geometry with relevant drops, slopes, and obstacles such as rocks, trees, pedestrians, and other vehicles,

- harsh conditions implied by the seasonality of the natural environment (rain, fog, dirt, day and night, sunset or sunrise, etc.).

This makes it possible to reproduce correctly the conditions that heavy equipment regularly encounters.

To create a good digital twin of the field environment, Simcenter Prescan, the solution developed by Siemens Digital Industries Software (DISW), ensures that objects have a sufficiently detailed description of their geometry and material properties by considering what matters for each sensor modality: camera, LiDAR, radar.

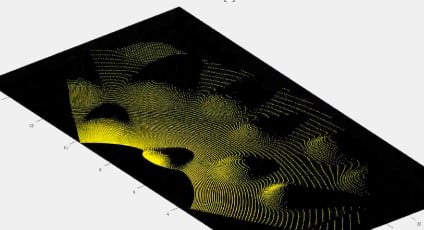

Additionally, Siemens DISW teams have been working in recent years on the coupling between their environment simulation and system simulation software, and investing in improved ground modeling technology. The Simcenter Amesim Track generator feature is an example. This feature allows the implementation of bumpy soil, with a specific penetration coefficient, to account for the soil’s impact on autonomy sensor performance at low or high vehicle speed. The interactions between vehicle dynamics, traction on soft ground, and the powertrain are crucial.

Any relevant obstacles associated with the scenarios of interest can be implemented as part of the environment. The objective is to analyze the performance of the sensors’ detection and of the object recognition algorithms. Rocks, trees, buildings, crops, pedestrians, wildlife, infrastructure, etc., are all relevant elements of an autonomous heavy equipment simulation environment, and object motion can be added when necessary.

Physical sensor modeling

When it comes to replacing the operator’s senses, one question summarizes the engineering challenges of implementing sensors like cameras, radars, and LiDARs: How can you predict what the machine will or will not sense?

A physical sensor modeling solution is therefore mandatory for a simulation platform dedicated to autonomous operations development. Indeed, the sensor design and the sensor configuration can be optimized virtually to a large extent.

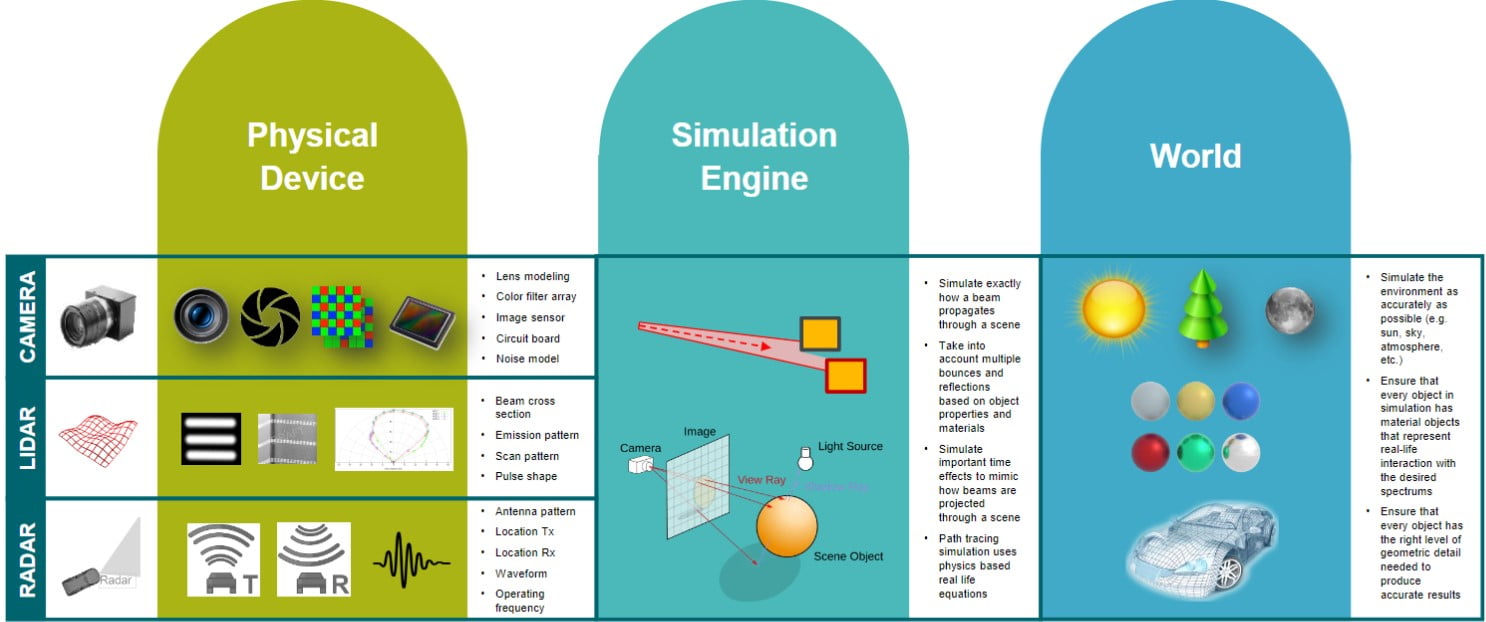

Sensor models come in several fidelity levels: ground truth sensors (which identify all objects that appear in the sensor range), probabilistic sensor models (which allow for fault insertion and filtering), and physics-based sensor models (for raw data simulation). With this range, we can support engineers in balancing the accuracy and computational time of sensor simulations against mission and scenario requirements, for a more efficient engineering workflow. A robust sensor simulation has to take three key components into consideration:

- The physical sensor device,

- The world,

- The simulation engine.
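To make the first two fidelity levels concrete, here is a minimal sketch in plain Python. It is not Simcenter Prescan code: the `Obstacle` class, both functions, the flat 2D geometry, and the single detection probability `p_detect` are assumptions chosen for illustration only.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Obstacle:
    name: str
    x: float  # metres ahead of the sensor (hypothetical vehicle frame)
    y: float  # metres to the left of the sensor


def ground_truth_detections(obstacles, max_range, fov_deg):
    """Ideal sensor: report every obstacle inside the range and field of view."""
    half_fov = math.radians(fov_deg) / 2.0
    visible = []
    for ob in obstacles:
        distance = math.hypot(ob.x, ob.y)
        bearing = math.atan2(ob.y, ob.x)
        if distance <= max_range and abs(bearing) <= half_fov:
            visible.append(ob)
    return visible


def probabilistic_detections(obstacles, max_range, fov_deg, p_detect, rng):
    """Probabilistic sensor: each ideal detection is missed with probability 1 - p_detect."""
    truth = ground_truth_detections(obstacles, max_range, fov_deg)
    return [ob for ob in truth if rng.random() < p_detect]


scene = [
    Obstacle("rock", 10.0, 1.0),
    Obstacle("tree", 30.0, -20.0),
    Obstacle("pedestrian", 80.0, 0.0),  # beyond the 50 m range used below
]
truth = ground_truth_detections(scene, max_range=50.0, fov_deg=90.0)
noisy = probabilistic_detections(scene, 50.0, 90.0, p_detect=0.9, rng=random.Random(0))
print([ob.name for ob in truth])  # ['rock', 'tree']
print([ob.name for ob in noisy])  # a subset of the line above
```

A physics-based model would replace the simple range and angle test with a simulation of the raw signal, which is where most of the extra computational time goes.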

The simulation engine is what brings together the physical device and the world. It allows us to handle important effects based on real-life physical equations. For example, for radar and LiDAR simulation, an in-house ray-tracing framework accurately captures how a beam propagates through a scene built in Simcenter Prescan. A high-accuracy simulation engine is important so that developers, testers, integrators, and authorities can trust Siemens as a key partner.

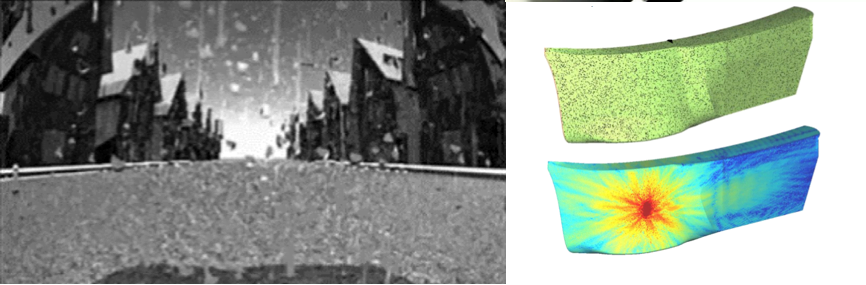

On top of the sensor modeling, engineering departments can use simulation to optimize the machine design for better sensor performance. Examples include preventing sensor soiling or excessive electric/electromagnetic disturbance from a metallic bumper covered by mud or rain. Both affect heavy equipment daily, yet neither is considered enough in current solution portfolios.

Heavy equipment vehicle modeling

A skilled operator brings many hours of machine operation experience. To improve the performance of the vehicle’s autonomous operations, it is mandatory to include accurate dynamics in the virtual training, verification, and validation of the controls. From the soil and tire models to the vehicle dynamics, the more physics you implement, the more robust your control will be, improving operability and safety.

Automating the machine also benefits other attributes. It enables better control of the energy distribution and of the powertrain actuation, which improves powertrain durability by keeping the machine within its safe load range.

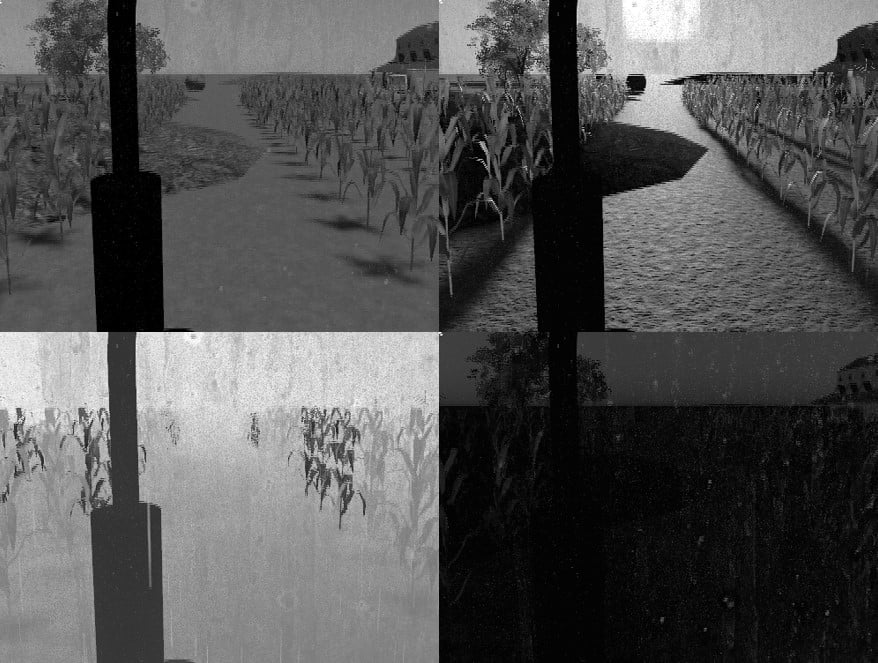

Simcenter simulation platforms, like Simcenter Amesim system simulation software and Simcenter 3D Motion, offer broad heavy equipment vehicle modeling capabilities, covering construction equipment, transportation, agriculture, and autonomous military intervention robots, and including the relevant vehicle and powertrain dynamics for each application. Simcenter Amesim, for example, can connect to the natural environment model through co-simulation based on FMI/FMU technology, as shown in the previous video of the autonomous tractor in the cornfield. Engineers can thus take the impact of realistic vehicle dynamics on sensor and algorithm performance into account throughout the development workflow.

The machine types are not limited to ground vehicles. Siemens DISW also offers the integration of autonomous drones, including flight dynamics. That capability is usually used for terrain recognition, crop identification, and supporting the autonomous vehicle’s decision strategy.
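The idea behind co-simulation can be illustrated with a fixed-step exchange loop between two independent models. The sketch below is plain Python and does not use the FMI API or any Simcenter interface: `VehicleModel`, `EnvironmentModel`, `controller`, and every numeric value are hypothetical stand-ins for the vehicle dynamics model, the environment and sensor model, and the control algorithm under test.

```python
from dataclasses import dataclass


@dataclass
class VehicleModel:
    """Stand-in for a vehicle dynamics model: a point mass on a straight line."""
    mass: float = 4000.0   # kg, assumed value
    position: float = 0.0  # m
    speed: float = 0.0     # m/s

    def step(self, force, dt):
        # Explicit Euler step; the clamp keeps braking from reversing the vehicle.
        self.speed = max(0.0, self.speed + force / self.mass * dt)
        self.position += self.speed * dt
        return self.position, self.speed


@dataclass
class EnvironmentModel:
    """Stand-in for the environment and sensor model: one static obstacle ahead."""
    obstacle_position: float = 40.0  # m, assumed value

    def step(self, vehicle_position):
        # Gap that an ideal range sensor would report.
        return self.obstacle_position - vehicle_position


def controller(gap, speed, target_speed=3.0, safe_gap=10.0):
    """Toy control law: brake inside the safe gap, otherwise track the target speed."""
    if gap < safe_gap:
        return -20000.0  # N, braking force
    return 8000.0 if speed < target_speed else 0.0  # N, traction force


def co_simulate(duration=30.0, dt=0.01):
    """Advance both models in lockstep, exchanging outputs at every communication step."""
    vehicle, world = VehicleModel(), EnvironmentModel()
    gap = world.step(vehicle.position)
    for _ in range(int(round(duration / dt))):
        force = controller(gap, vehicle.speed)
        position, speed = vehicle.step(force, dt)
        gap = world.step(position)
    return gap, vehicle.speed


final_gap, final_speed = co_simulate()
print(f"stopped {final_gap:.2f} m before the obstacle, speed {final_speed:.2f} m/s")
```

Because each model only sees the other through exchanged values, either one can be swapped for a higher-fidelity version without touching the loop, which is the property that makes this style of coupling attractive.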

Automate and accelerate the verification workflow

A key element in significantly reducing the cost of autonomous heavy equipment simulation is the automation of the algorithm verification and validation workflow. Automating the validation of algorithm performance under various weather or lighting conditions, or simply making sure that the workflow covers all relevant scenarios, improves the coverage of your perception algorithm validation, not to mention the development time and cost savings associated with this automation.
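As a rough illustration of what automating scenario coverage can mean, the sketch below enumerates every combination of a few conditions named in this article and measures how much of that plan has been executed. The condition lists, the dictionary layout, and the `coverage` helper are assumptions for illustration, not part of any Simcenter tool.

```python
from itertools import product

# Hypothetical condition axes, taken from the conditions mentioned in this article.
WEATHER = ["clear", "rain", "fog"]
LIGHTING = ["day", "night", "sunrise", "sunset"]
OBSTACLES = ["rock", "tree", "pedestrian", "vehicle"]


def build_scenarios():
    """Enumerate every combination so that no condition pairing is left untested."""
    return [
        {"id": i, "weather": w, "lighting": l, "obstacle": o}
        for i, (w, l, o) in enumerate(product(WEATHER, LIGHTING, OBSTACLES))
    ]


def coverage(executed_ids, planned):
    """Fraction of the planned scenarios that have actually been run."""
    planned_ids = {s["id"] for s in planned}
    return len(planned_ids & set(executed_ids)) / len(planned_ids)


scenarios = build_scenarios()
print(len(scenarios))                              # 48
print(coverage(range(12), scenarios))              # 0.25
```

A full factorial plan grows quickly as axes are added, so in practice the list would likely be sampled or prioritized toward critical cases rather than run exhaustively.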

Sensor and vehicle configuration, sensor mounting, and vehicle design exploration can also be automated. Simcenter HEEDS, the Siemens DISW design space exploration and optimization software package, enables this performance verification automation in the example above.

The objective is to deploy a virtual verification framework for ADAS and autonomous vehicle systems that allows easy scenario generation, efficient identification of critical cases, and systematic virtual verification of requirements. In other words, the aim is to automate and accelerate the scenario set-up, the interaction between the different simulations, and the post-processing of relevant metrics, for quick and efficient analysis of Key Performance Indicators (KPIs).
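To give a feel for sensor mounting exploration, here is a minimal sketch that searches a small grid of mounting heights and pitch angles. It is not the algorithm of any commercial optimizer: the flat-ground geometry, the function names, the candidate values, and the 25 m look-ahead requirement are all assumptions made for illustration.

```python
import math
from itertools import product


def ground_coverage(height_m, pitch_deg, vfov_deg):
    """Return (near, far) ground distances seen by a downward-pitched sensor on flat ground.

    Assumes 0 < pitch_deg + vfov_deg / 2 < 90 so the steepest ray hits the ground ahead.
    """
    lower = math.radians(pitch_deg + vfov_deg / 2.0)  # steepest ray below horizontal
    upper = math.radians(pitch_deg - vfov_deg / 2.0)  # shallowest ray
    near = height_m / math.tan(lower)
    # A ray at or above horizontal never meets flat ground, so the view is unbounded.
    far = math.inf if upper <= 0.0 else height_m / math.tan(upper)
    return near, far


def explore(heights, pitches, vfov_deg, min_far_m):
    """Exhaustive grid search: smallest blind zone that still sees min_far_m ahead."""
    best = None
    for h, p in product(heights, pitches):
        near, far = ground_coverage(h, p, vfov_deg)
        if far < min_far_m:
            continue  # violates the look-ahead requirement
        if best is None or near < best["blind_zone_m"]:
            best = {"height_m": h, "pitch_deg": p, "blind_zone_m": near}
    return best


best = explore(
    heights=[1.5, 2.0, 2.5, 3.0],
    pitches=[0.0, 10.0, 20.0, 30.0],
    vfov_deg=40.0,
    min_far_m=25.0,
)
print(best)
```

A real exploration would evaluate each candidate with the full sensor and vehicle simulation rather than a closed-form formula, and would typically use a smarter search than a full grid.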

Conclusion

To conclude, I would like to pass along the message that Siemens DISW, with its comprehensive Xcelerator portfolio of software, services, and applications, can support the development of your autonomous machine and its operations, and provide you with a simulation platform to validate your machine’s performance virtually.

The Simcenter simulation platform allows the development of models ranging from the environment to the sensors and the machine dynamics, in support of advanced control algorithms. If you want to know more about our solution, please download the following pdf document. Also, feel free to join me during the IVT Virtual Event on February 9, which includes a presentation track dedicated to “Technologies and strategies advancing autonomous solutions.”
