Circuit aging is emerging as a mandatory design concern across a swath of end markets, particularly in markets where advanced-node chips are expected to last for more than a few years. Some chipmakers view this as a competitive opportunity, but others are unsure we fully understand how those devices will age.

Aging is the latest in a long list of issues being pushed further left in the design flow. In the past, the fab hid many of these problems from design teams. But as margin shrinks at each new node, the onus has shifted to the design side to solve the problems earlier in the flow, as evidenced by the widespread adoption of back-end implementation software and sign-off tools. Power and thermal issues have become so constraining that they have been pushed even further forward in the development flow, starting at the architecture level.

Reliability is the latest concern to surface, and while it may not be as prominent yet, it is no less important. Left unchecked, devices may not survive their intended lifetime, a problem made worse because many of these devices are expected to last longer today than in the past. Reputations are at stake across the supply chain. On top of that, in-field replacements are expensive. And margining, the traditional approach to improving reliability, makes those products uncompetitive at advanced nodes.

“Designers have long considered aging in high-reliability design areas,” says Stephen Crosher, director for SLM strategic programs at Synopsys. “Maybe they were designed for particular applications, such as automotive, or extreme stress environments. But it wasn’t necessarily a mainstream consideration for designers. Now it is transitioning into normal practice, and your standard designer will need to become aware of it.”

Several factors are changing. “In more advanced nodes and with increasing speed requirements, it has become much more of a critical design consideration,” says Ashraf Takla, president and CEO of Mixel. “The aging impact needs to be evaluated and accounted for in timing budget early in the design stage, and verification needs to be done to ensure the final budget meets aging budgets.”

The importance of addressing aging has spread beyond just safety-critical and mission-critical applications. “Several of our IP providers, especially on the lower process technology nodes, are asking about aging models and the aging capability of EDA simulation,” says Greg Curtis, product manager for Analog FastSPICE at Siemens EDA. “It is not just automotive anymore. We see it in mobile communications; we see it in the Internet of Things. It is becoming a good practice that companies start looking at the aging of their IP.”

For many companies, this is unavoidable. “The largest variability factor that impacts lifetime is temperature,” says Brian Philofsky, principal technical marketing engineer at Xilinx. “Operating electronics at reduced temperatures often has a measurable effect on the circuits’ lifetime and aging. Another factor an engineer has control over within the device is the amount of current consumed. The higher current draw can significantly reduce lifetime due to electromigration and other undesirable effects. Unfortunately, modern circuit design is put at odds as compute density increases with every node shrink. Simultaneously, the reduced voltage has the impact of increasing current draw within the same power envelope. Over the last few years, the trend is higher operating currents at higher operating temperatures, making reliability more challenging.”
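The combined effect of current and temperature on electromigration lifetime is often estimated with Black's equation, which is not named in the quote and is brought in here only as an illustration. The sketch below compares lifetime at one operating point against a reference point. The current exponent `n` and activation energy `ea_ev` are illustrative placeholders, not values for any real process.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def relative_em_lifetime(j_ratio, t_ref_c, t_c, n=2.0, ea_ev=0.9):
    """Lifetime relative to a reference point, using Black's equation.

    j_ratio is current density divided by the reference current density.
    n and ea_ev are assumed, illustrative fitting parameters.
    """
    t_ref_k = t_ref_c + 273.15
    t_k = t_c + 273.15
    current_term = j_ratio ** (-n)
    thermal_term = math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_k - 1.0 / t_ref_k))
    return current_term * thermal_term


# Same temperature, twice the current density: lifetime drops to a quarter
# when n = 2.
print(relative_em_lifetime(j_ratio=2.0, t_ref_c=85.0, t_c=85.0))

# Same current density, 20 degrees hotter: lifetime shrinks further.
print(relative_em_lifetime(j_ratio=1.0, t_ref_c=85.0, t_c=105.0))
```

Under these assumptions, current and temperature multiply, which is why rising operating current at rising operating temperature is such an unfavorable combination.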

Geometry impact

While the underlying mechanisms that cause aging have not changed, their importance has become more significant at each new process node. “Reliability is related to device size, and in particular the channel length,” says Jushan Xie, senior software architect at Cadence. “As the channel length gets shorter, the effects become more pronounced. The electric field inside the channel can become strong. Devices at 45nm and below have to consider reliability.”

That does not mean that designs at older nodes can safely ignore the impacts. “While it is more predominant in advanced technology nodes, such as 28nm and below, we have seen it on 40nm devices as well,” says Ahmed Ramadan, AMS foundry relations manager at Siemens EDA. “Recently, specialty foundries that are providing technologies on the 130nm and 180nm nodes are starting to consider providing aging models for their customers. This is coming because of pressure from the customers. It is a need they are seeing in the type of designs and applications that they are working on.”

New device technologies are making it a larger issue. “At 28nm, people already were aware of some of the mechanisms of over-stressing devices,” says Oliver King, director of engineering at Synopsys. “Gates were very thin. They were susceptible to being overstressed. Along with continued shrinking of dimensions, designs switched to finFETs, which bring in new mechanisms such as the fin structure, and this just made it much more prominent.”

One of the big problems is that not everything scales equally in new geometries. “You are scaling the dimensions of the length and the width of the transistors,” says Siemens’s Ramadan. “But you’re not able to scale the gate oxide at the same pace. This is going to add additional stress to the device. You’re not able to scale the voltage at the same pace because scaling the voltage will not leave enough room above the device’s threshold voltage. This increases the amount of stress that the device will be facing.”

Gate scaling without voltage scaling is a huge issue. “If the average transistor is consuming the same amount of current as older transistors on larger nodes, then by scaling device density, you’ve increased the power density,” says Synopsys’ Crosher. “That relates to heat, and heat can be the big culprit in this equation. Self-heating in finFETs is also contributing to this. The transition from planar into finFET is where we start to see those sorts of stress issues applying to consumer products and broadening the reliability concerns. They are exposing themselves to those kinds of stress conditions, which need to be mitigated to try and get any reasonable lifetime from those devices.”

This gets even more complicated as more die are included in the same package. In the past, process-related issues could be solved with enough volume and time. But many of the advanced package implementations are unique, and the chips within them may age at different rates.

“The biggest problem we see is the huge number of implementation options,” said Andy Heinig, group leader for advanced system integration and department head for efficient electronics at Fraunhofer IIS’ Engineering of Adaptive Systems Division. “It’s not clear how you compare these different options. Chip design is easier if you have previous chip generations that are similar. But now we have a huge range of options, both for packages and the software within them.”

The number of things that can go wrong increases inside a package, as well. “There is mechanical stress, in addition to warpage, and the potential for thermal mismatch,” said Marc Swinnen, director of product marketing at Ansys. “You also still have to get the power from Chip A to Chip B. Even if a bump fails, you still have to get the power through. But if you have a current spike as a result of this, other bumps can fail, too.”

Understanding how the various pieces go together requires much more in-depth analysis throughout the design process.

Aging models

The analysis starts with suitable models, and it can be tricky to model phenomena that may not be fully understood. “There’s an element of we don’t actually know whether the models are accurate enough,” says Synopsys’ King. “Ultimately, the models predict certain aging for a given circuit. And only really time will tell whether they were correct in that prediction. It is a complicated issue. It’s not just the aging mechanisms that we already know about. It’s also self-heating effects, process variations, Monte Carlo, and other effects that you need to consider as part of analyzing any given circuit. Maybe the models are right; maybe they’re not.”

It can be easy to dismiss the industry’s state, but it has to work with something. “We have not seen any indication that the models are inadequate,” says Mixel’s Takla. “That said, foundries, in cooperation with tool providers, are continuously tweaking their aging models to improve accuracy based on silicon measurements.”

While techniques like burn-in have been successful for traditional devices, it is not known how exactly they apply to these new effects. “You cannot wait for 10 years. You have to find ways to get the results you need quicker,” says Cadence’s Xie. “You will be using some theory or equations for acceleration, and you want to get some equivalent to 10 years of age in a short period of time. Calibration is important, and there are theories about how to accelerate aging.”
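One widely used acceleration equation for temperature-driven mechanisms is the Arrhenius model. The article does not say which equations Xie has in mind, so the sketch below is only an illustration of the idea: it estimates how many hours of high-temperature stress would correspond to 10 years at the use temperature. The activation energy and both temperatures are assumed values.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_acceleration(ea_ev, t_use_c, t_stress_c):
    """Acceleration factor of the stress temperature over the use temperature."""
    t_use_k = t_use_c + 273.15
    t_stress_k = t_stress_c + 273.15
    return math.exp((ea_ev / BOLTZMANN_EV_PER_K) * (1.0 / t_use_k - 1.0 / t_stress_k))


def stress_hours_for_lifetime(lifetime_years, ea_ev, t_use_c, t_stress_c):
    """Stress hours equivalent to the given lifetime at the use temperature."""
    lifetime_hours = lifetime_years * 365.25 * 24
    return lifetime_hours / arrhenius_acceleration(ea_ev, t_use_c, t_stress_c)


# Assumed numbers: 0.7 eV activation energy, 55 C in the field, 125 C stress.
factor = arrhenius_acceleration(ea_ev=0.7, t_use_c=55.0, t_stress_c=125.0)
hours = stress_hours_for_lifetime(10, ea_ev=0.7, t_use_c=55.0, t_stress_c=125.0)
print(f"acceleration factor: {factor:.1f}")
print(f"stress hours for 10 years: {hours:.0f}")
```

The result is only as good as the activation energy, which is why calibration against silicon measurements matters so much.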

The industry is trying to reach a consensus. “I’ve been working with Compact Model Coalition (CMC) for more than 20 years,” says Ramadan. “It was probably 7 years ago when we first started having discussions about a standard aging model. At that time, we could not converge on a single standard for hot-carrier injection (HCI) or negative-bias temperature instability (NBTI) that would satisfy all foundries and design communities. They felt they had to make customization and modification to fit their processes.”

But that can leave device companies holding the ball. “We guarantee our commercial devices for 10 years of operation when maintained within its operating specifications,” says Xilinx’s Philofsky. “There are two situations that may require further consideration or analysis — a design requiring an operating lifetime greater than 10 years, or a design that may exceed the operating conditions and wishes to understand impacts to the lifetime. In these situations, we have simulation models, analysis tools, and reliability data to be used and applied to specific operating conditions for particular devices. This can fine-tune the lifetime specifications, sometimes allowing for a more efficient operating range. We’ve done this for decades and evolved our models to the point of having a high degree of confidence in them. Yet we are continuously improving them based on the latest theoretical and empirical data collected.”

Work continues within the CMC. “Still not yet there,” says Ramadan. “Every foundry and design house is creating its own models. Some of them are initially physics-based models. But many empirical formulations are also taking place to fit their current process and the target applications. How confident are we in these models? We should be confident enough for them to give a good estimate for the amount of degradation that’s going to happen on the device.”

Even with accurate models, there are other sources of inaccuracy. “The nature of aging simulation itself utilizes a lot of approximations,” Ramadan notes. “Consider that you run the simulation for aging over a short period of time, and then you do an extrapolation for the intended period. With this extrapolation, there’s a lot of approximations. But so far, we didn’t hear any complaints from customers that the customers’ models in terms of aging are far off. These things will need some years to validate. If you are actually running aging analysis today, you need maybe five years to make sure that what happened in real life is actually correct.”
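A minimal sketch of that simulate-then-extrapolate step, assuming the threshold-voltage shift follows a power law in time (shift = a * t^n). That form is an assumption made for illustration, not something the article specifies. The data points are synthetic and the function names are hypothetical.

```python
import math


def fit_power_law(times_s, shifts_mv):
    """Least-squares fit of shift = a * t**n, done in log-log space."""
    xs = [math.log(t) for t in times_s]
    ys = [math.log(s) for s in shifts_mv]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    n = sxy / sxx
    a = math.exp(mean_y - n * mean_x)
    return a, n


def extrapolate(a, n, t_s):
    """Predicted shift at time t_s under the fitted power law."""
    return a * t_s ** n


# Synthetic short-stress results covering about one day of stress.
times = [1e2, 1e3, 1e4, 1e5]
shifts = [2.0 * t ** 0.2 for t in times]

a, n = fit_power_law(times, shifts)
ten_years_s = 10 * 365.25 * 24 * 3600
print(f"fitted exponent: {n:.3f}")
print(f"extrapolated 10-year shift (mV): {extrapolate(a, n, ten_years_s):.1f}")
```

The extrapolation spans several decades of time beyond the data, so small fitting errors grow. In this toy setup, an exponent of 0.22 instead of 0.20 changes the 10-year prediction by close to 1.5x.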

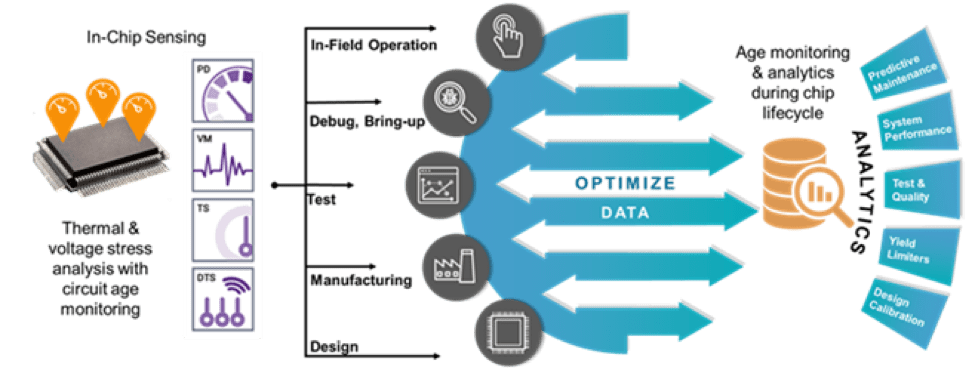

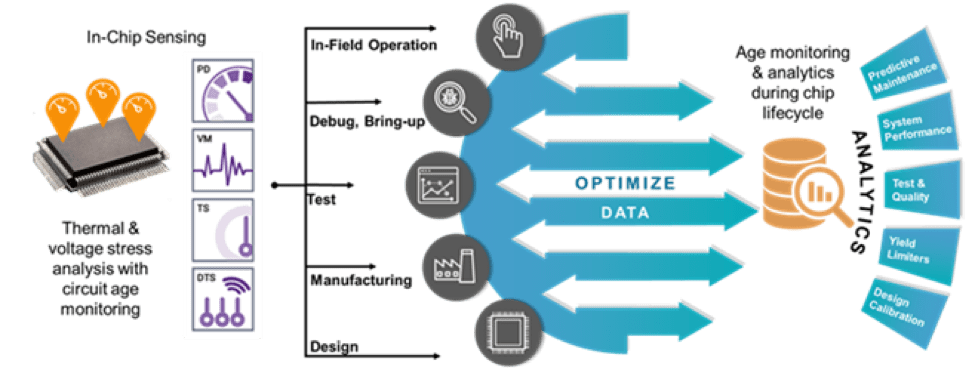

Aging cannot be considered on its own. “Variability also plays a part,” says Crosher. “It goes hand in hand with increased gate density, the manufacturing process, and greater variability. We haven’t seen them mature in the field for 15 years to know the aging impacts and effects. So that’s why there is a reliance, and a critical need, that within advanced devices, you need some form of embedded sensing to manage those issues. If you can measure the conditions of the chip in real time and see how devices are degrading and how they’re aging, then you can take some mitigating steps to try and manage that.”

He’s not alone in identifying this as an issue. Fraunhofer’s Heinig pointed to system variation as one of the big challenges as more devices are integrated into systems and packages. Those devices are expected to last longer in the field. “There are no tools today to solve this problem,” he said. “It’s difficult to verify because, with software updates, the product also changes over time.”

Fig. 1: Adding aging sensors into the design, tied to lifecycle analysis. Source: Synopsys

Where to focus

Digital and analog will be affected differently, as will devices subject to frequent change and, in some cases, infrequent change. “Any place where there’s a lot of activity will be more sensitive to device aging,” says Art Schaldenbrand, senior product manager at Cadence. “For devices, you can look at the clock tree and look at what is happening. Digital designs are sensitive to delay changes. The other place where this becomes a challenge is within analog designs. An example would be in a bias tree. The bias transistors moving and aging can potentially accelerate the aging of other devices in the bias network. There’s always going to be some different elements in the design, and you have to look at them a little bit differently to be able to analyze the reliability.”

Designs that employ dynamic voltage and frequency scaling may have to be very careful. “Problems often arise when you are trying to optimize devices, maybe reducing supplies,” says King. “It may be tied to adaptive voltage schemes, and it is a question of how low you can go on the supply, with your logic still meeting timing. There could be designs that push the supply up when they detect that they need to. If performance drop-off can’t be corrected, then at least a graceful bow-out may be an important design consideration.”

Sensitivity analysis is one way to approach the problem. “Let’s say that there is a certain design parameter they are concerned about, such as the gain for an amplifier,” says Ramadan. “They would want to see how sensitive each transistor is, contributing to change on that gain. Then they can consider the change in the threshold voltage or Ids due to aging. With sensitivity analysis, they can understand how big the impact of aging will be on specific devices in the design compared to the rest of the devices, and then start doing some guarding for those.”
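A minimal sketch of that ranking, assuming a first-order approximation in which each transistor's contribution is its sensitivity multiplied by its predicted threshold-voltage shift. The `toy_gain` function, the device names, and all numbers are hypothetical stand-ins for what a circuit simulator would supply.

```python
def rank_aging_contributors(metric, nominal_vth, aged_shift, step=1e-3):
    """Rank devices by estimated impact of aging on a design metric.

    metric: callable taking a dict of threshold voltages (V), returning a float.
    nominal_vth: dict of fresh threshold voltages per device (V).
    aged_shift: dict of predicted threshold shifts per device (V).
    """
    base = metric(nominal_vth)
    contributions = {}
    for name, vth in nominal_vth.items():
        perturbed = dict(nominal_vth)
        perturbed[name] = vth + step
        # Finite-difference sensitivity of the metric to this device.
        sensitivity = (metric(perturbed) - base) / step
        contributions[name] = sensitivity * aged_shift[name]
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)


def toy_gain(vth):
    """Hypothetical amplifier gain (dB) as a function of three thresholds."""
    return 40.0 - 30.0 * vth["M1"] - 5.0 * vth["M2"] + 1.0 * vth["M3"]


nominal = {"M1": 0.40, "M2": 0.40, "M3": 0.45}
shift = {"M1": 0.020, "M2": 0.030, "M3": 0.010}

ranking = rank_aging_contributors(toy_gain, nominal, shift)
for name, delta in ranking:
    print(f"{name}: estimated gain change {delta:+.3f} dB")
```

In this toy case M2 ages the most, but M1 dominates the gain change because the gain is far more sensitive to it. That is the kind of result that tells a designer where to add guarding.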

But you have to be careful to consider all of the important areas. “There is a phenomenon called non-conductive stress,” says Cadence’s Schaldenbrand. “Consider a device such as a watchdog or a monitor. It will be sitting idle, potentially for years, and you want it to spring into action if there’s some condition that occurs. Even those circuits that you think are just sitting there doing nothing are being stressed. They can age and potentially fail due to the aging that occurs while they’re sitting idle.”

How to tackle the issue

There are several ways to take the issues into account during the design, implementation, and sign-off development stages. Schaldenbrand lists three levels of analysis that can be performed:

- Monitoring conditions a device operates under. This is effectively monitoring things like the electric field by looking at device size and other factors. These checks are called device asserts. It may show that a device sees a lot of voltage, so it’s a place that is sensitive and a potential problem.

- Run analysis. You can conduct an aging analysis and say a device will operate under certain conditions for a given time period. At the end of life, it will have certain characteristics. If you do corner analysis or Monte Carlo analysis, you can also do aging analysis simultaneously.

- Gradual aging. This makes piece-wise approximations for an operating lifetime. Usually, designers are relatively experienced and know which blocks are more sensitive to those kinds of phenomena. You do not have to run those tests everywhere because they tend to be relatively expensive.
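The piece-wise idea behind gradual aging can be sketched as follows. This is an illustration under stated assumptions, not any vendor's flow: degradation is assumed to follow a power law in time, and the stress prefactor is re-evaluated at the start of each interval from the shift accumulated so far. The function `prefactor_for` and all constants are hypothetical.

```python
def gradual_aging(prefactor_for, n, total_s, intervals):
    """Accumulate shift = a * t**n piece-wise, updating a each interval.

    prefactor_for: callable mapping the current shift to the prefactor a.
    Each interval converts the accumulated shift into an equivalent stress
    time under the new prefactor, then advances by one interval.
    """
    shift = 0.0
    dt = total_s / intervals
    for _ in range(intervals):
        a = prefactor_for(shift)
        equivalent_t = (shift / a) ** (1.0 / n) if shift > 0.0 else 0.0
        shift = a * (equivalent_t + dt) ** n
    return shift


ten_years_s = 10 * 365.25 * 24 * 3600

# Single-shot estimate: stress is assumed constant over the whole lifetime.
one_shot = 2.0 * ten_years_s ** 0.2

# Piece-wise estimate: stress is assumed to relax as the device degrades.
piecewise = gradual_aging(lambda s: 2.0 / (1.0 + 0.01 * s), 0.2, ten_years_s, 20)

print(f"single-shot shift (mV): {one_shot:.1f}")
print(f"piece-wise shift (mV): {piecewise:.1f}")
```

Each interval requires the stress to be re-evaluated, which in a real flow means another simulation. That is one reason this kind of analysis tends to be reserved for the blocks known to be sensitive.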

Process migration is becoming expensive. “For every process migration, say from 16nm to 10, to 7, to 5nm, all the way down to 3nm, every process node according to our customers requires three times more simulations because of the additional PVT corners they need to run,” says Siemens’ Curtis. “It puts a tremendous burden on their simulation needs to ensure first-time silicon success.”

But even this level of analysis does not provide certainty. “Reliability is statistical,” says Xie. “You need to look at it as a Monte Carlo problem. You have 100 devices, and they are identical when first fabricated. Even if you apply the same stress to those devices over 10 years and measure the device degradation, it will have a distribution. Most companies are not considering this distribution for relative aging.”
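The distribution Xie describes can be illustrated with a small Monte Carlo sketch. The lognormal spread, the median shift, and the 40 mV budget are all assumptions chosen for illustration, not measured data.

```python
import math
import random
import statistics


def sample_aged_shifts(n_devices, median_shift_mv, sigma_log, seed=0):
    """Draw end-of-life threshold shifts for nominally identical devices."""
    rng = random.Random(seed)
    mu = math.log(median_shift_mv)
    return [rng.lognormvariate(mu, sigma_log) for _ in range(n_devices)]


def fraction_over_budget(shifts_mv, budget_mv):
    """Fraction of devices whose shift exceeds the design budget."""
    return sum(1 for s in shifts_mv if s > budget_mv) / len(shifts_mv)


# 100 devices, same stress, assumed 30 mV median shift after 10 years.
shifts = sample_aged_shifts(100, median_shift_mv=30.0, sigma_log=0.3)

print("median shift (mV):", round(statistics.median(shifts), 1))
print("worst shift (mV):", round(max(shifts), 1))
print("fraction over 40 mV budget:", fraction_over_budget(shifts, 40.0))
```

A design signed off against the median alone would miss the devices in the tail, which are the ones that fail first in the field.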

Nobody wants to design for the worst case. “When you embed sensors, you don’t have to predict aging,” says King. “You can measure it. You can see what is aging and make adjustments to that circuit or highlight that the chip is close to failure and decide to go into a safe state. That may enable you to pull a failing computer from a data center or ensure safe operation of your self-driving car.”
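The measure-then-react approach can be sketched as a simple policy. Everything here is hypothetical, including the sensor reading (timing slack in picoseconds), the thresholds, and the action names; a real scheme would depend on the sensor IP and the power management in the design.

```python
def aging_response(slack_ps, vdd_mv, min_slack_ps=20.0, step_mv=10.0, vdd_max_mv=900.0):
    """Choose an action from a measured timing slack and the current supply.

    Returns a tuple of (action, new supply voltage in mV).
    """
    if slack_ps >= min_slack_ps:
        # Enough margin remains, so nothing changes.
        return ("hold", vdd_mv)
    if vdd_mv + step_mv <= vdd_max_mv:
        # Recover speed lost to aging by raising the supply one step.
        return ("raise_supply", vdd_mv + step_mv)
    # No headroom left: flag the part and degrade gracefully.
    return ("enter_safe_state", vdd_mv)


print(aging_response(slack_ps=50.0, vdd_mv=800.0))
print(aging_response(slack_ps=5.0, vdd_mv=800.0))
print(aging_response(slack_ps=5.0, vdd_mv=895.0))
```

The last branch corresponds to the case described above, where the chip reports that it is close to failure instead of failing silently.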

Built-in analysis can be changed over time. “Xilinx provides a system monitor circuit to allow users to monitor temperature and voltage to ensure safe operation,” says Philofsky. “Having programmability for the device will enable us to extend this measurement further and allow a more comprehensive view of reliability over many fixed-function devices.”

At the least, it means that margins can be squeezed. “The trend the industry was taking, before actually focusing on having good aging models and implementing an aging simulation flow, was to insert a lot of margins,” says Ramadan. “They were leaving a lot on the table, which they cannot afford anymore. By doing some aging simulations, they can tighten the margins to compete in the market without taking on too much risk. They will leave some, but not as much as they did before.”

There remains hope within the CMC. “Back in 2018, the CMC released a standard that supports an aging simulation flow through the Open Modeling Interface (OMI),” he says. “There is more development to include additional models in that flow. It has gained a lot of adoption from different design houses, and most importantly, from different foundries. This interface is simulator agnostic, meaning that foundries do not need to create a different interface for different simulators. We have seen a lot of pressure from design houses and foundries to provide an aging interface. And more and more foundries are currently starting to adopt the standard and OMI interface.”

Conclusion

While the mechanisms that contribute to aging are understood, the industry struggles with creating models that provide sufficient accuracy. Part of the problem is there has not been enough time to collect data that can be used to assess those models and to fine-tune them. That process is ongoing. Until the accuracy of those models is fully understood, design teams either have to leave some margin on the table or incorporate adaptive schemes into their devices to mitigate any aging problems when they arise.
